08. Adding Depth

So far you have seen how the RGB sensor in an RGB-D camera works. Let's now have a look at the depth sensor to see how it enables an RGB-D camera to perceive the world in three dimensions.

Many RGB-D cameras use a technique called structured light to obtain depth information from a scene.

This setup works much like a stereo camera setup, but there are a couple of major differences.

In a stereo setup, we calculate depth from the disparity, that is, the difference in position of the same scene point between the images captured by the left and right cameras. In contrast, a structured light setup contains one projector and one camera.
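To make the stereo case concrete: for a rectified pair, depth follows from disparity as Z = f * B / d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. The snippet below is a minimal sketch under that idealized assumption. The function name and the numbers are made up for illustration and do not describe any particular camera.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters for an idealized, rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity would mean the point is infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 500 px focal length, 10 cm baseline.
near = depth_from_disparity(disparity_px=50, focal_px=500, baseline_m=0.1)
far = depth_from_disparity(disparity_px=5, focal_px=500, baseline_m=0.1)
print(near, far)
```

Note the inverse relationship: a large disparity means the point is close to the cameras, and a small disparity means it is far away.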

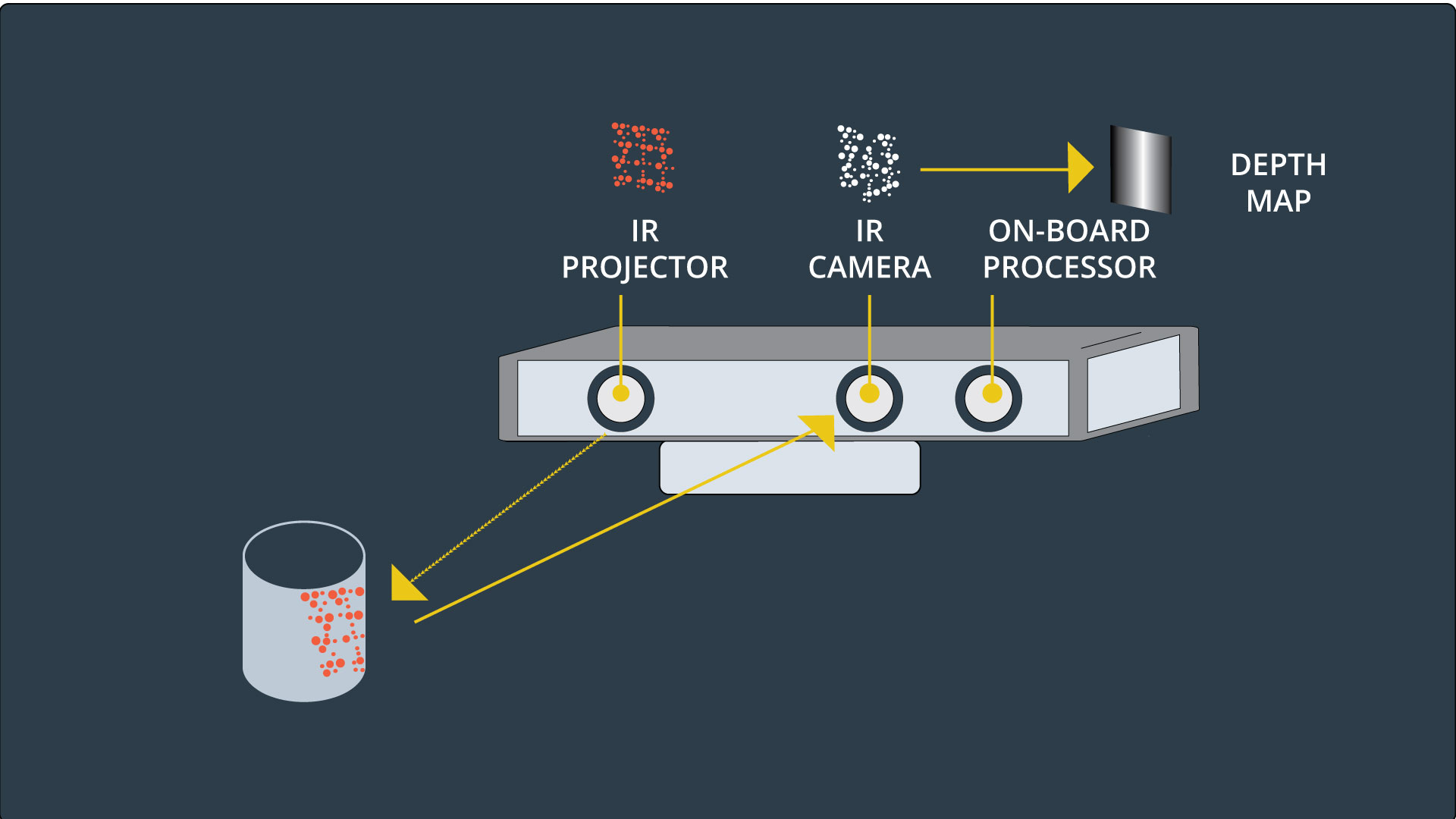

Here is how the RGB-D camera generates the depth map:

The projector projects a known pattern on the scene. This pattern can be as simple as a series of stripes or a complex speckled pattern.

The camera then captures the light pattern reflected off the objects in the scene. The perceived pattern is distorted by the shape of those objects. By comparing the known projected pattern (which is stored on the sensor hardware) with the reflected pattern, the sensor generates a depth map, much as a stereo camera does from its two images.
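The comparison step can be sketched in a few lines. This is a simplified, one-dimensional illustration, not the algorithm any real sensor runs: it assumes the projector and camera are rectified like a stereo pair, that a single row of a binary speckle pattern is shifted uniformly by a flat surface, and that the shift can be found by brute-force matching. All names and numbers (`estimate_shift`, the 580 px focal length, the 7.5 cm baseline) are hypothetical.

```python
import random


def simulate_observed(reference, true_shift):
    """Shift the pattern sideways, as a flat surface at one depth would."""
    return [0] * true_shift + reference[:len(reference) - true_shift]


def estimate_shift(reference, observed, max_shift):
    """Find the pixel shift that best aligns the observed row with the reference."""
    overlap = len(reference) - max_shift  # same window size for every candidate
    best_shift, best_cost = 0, float("inf")
    for shift in range(max_shift + 1):
        # Sum of absolute differences between the two rows at this shift.
        cost = sum(abs(observed[i + shift] - reference[i]) for i in range(overlap))
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


# Known pattern: one row of a random binary speckle (fixed seed for repeatability).
rng = random.Random(0)
reference = [rng.randint(0, 1) for _ in range(64)]

# What the camera sees when the surface shifts the pattern by 6 pixels.
observed = simulate_observed(reference, true_shift=6)

shift = estimate_shift(reference, observed, max_shift=16)

# Assumed projector-camera geometry (hypothetical values), using Z = f * B / d.
focal_px, baseline_m = 580.0, 0.075
depth_m = focal_px * baseline_m / shift
print(shift, depth_m)
```

A real sensor repeats this kind of matching for small patches around every pixel, which yields one depth value per pixel instead of a single value for the whole row.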

Below is an example of a projected pattern laid over a scene in order to recover the depth at each pixel.